Problems tagged with "linear regression"

Problem #003

Tags: linear regression, least squares

Suppose a line of the form \(H(x) = w_0 + w_1 x\) is fit to a data set of points \(\{(x_i, y_i)\}\) in \(\mathbb R^2\) by minimizing the mean squared error. Let the mean squared error of this predictor with respect to this data set be \(E_1\).

Next, create a new data set by adding a single new point to the original data set with the property that the new point lies exactly on the line \(H(x) = w_0 + w_1 x\) that was fit above. Let the mean squared error of \(H\) on this new data set be \(E_2\).

Which of the following is true?

Solution

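\(E_1 > E_2\)

The new point lies exactly on the line, so its squared error is zero and the sum of squared errors is unchanged, while the number of points grows from \(n\) to \(n + 1\). Hence \(E_2 = \frac{n}{n+1} E_1\), which is strictly smaller than \(E_1\) whenever \(E_1 > 0\), that is, whenever the line does not already fit the original data perfectly.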
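The comparison between \(E_1\) and \(E_2\) can be checked numerically. The sketch below is only an illustration: the synthetic data, the random seed, and the helper name `mse` are assumptions, not part of the original problem. It fits a line by least squares, appends one point that lies on the fitted line, and compares the two errors.

```python
import numpy as np

# Hypothetical noisy data; any data set that is not fit perfectly would do.
rng = np.random.default_rng(0)
x = rng.uniform(-5, 5, size=20)
y = 3.0 + 2.0 * x + rng.normal(scale=1.0, size=20)

# Fit H(x) = w0 + w1 * x by least squares (this minimizes the MSE).
X = np.column_stack([np.ones_like(x), x])
w, *_ = np.linalg.lstsq(X, y, rcond=None)

def mse(w, x, y):
    """Mean squared error of the line w[0] + w[1] * x on the points (x, y)."""
    return np.mean((w[0] + w[1] * x - y) ** 2)

E1 = mse(w, x, y)

# Add one new point that lies exactly on the fitted line.
x_new = 1.5
y_new = w[0] + w[1] * x_new
E2 = mse(w, np.append(x, x_new), np.append(y, y_new))

n = len(x)
print(E1, E2, n / (n + 1) * E1)  # the last two values should agree
```

The sum of squared errors is the same in both cases, so only the divisor changes, from \(n\) to \(n + 1\).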

Problem #062

Tags: linear regression, least squares

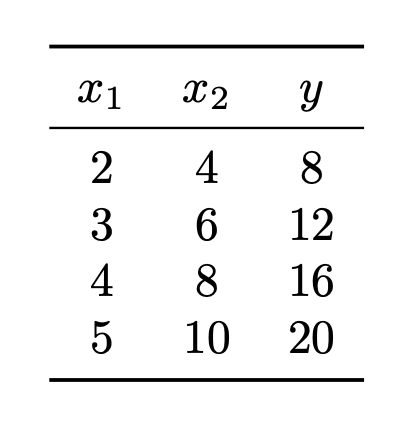

Suppose a linear regression model \(H(\vec x) = w_0 + w_1 x_1 + w_2 x_2\) is trained by minimizing the mean squared error on the data shown below, where \(x_1\) and \(x_2\) are the features and \(y\) is the target:

True or False: there is a unique weight vector \(\vec w = (w_0, w_1, w_2)^T\) minimizing the mean squared error for this data set.

Solution

False.

\(y\) can be predicted exactly using only \(x_1\). In particular, \(y = 4x_1\). So the weight vector \(\vec w = (0, 4, 0)^T\) is one that minimizes the mean squared error.

On the other hand, \(y\) can also be predicted exactly using only \(x_2\). In particular, \(y = 2x_2\). So the weight vector \(\vec w = (0, 0, 2)^T\) is another that minimizes the mean squared error.

In fact, there are infinitely many weight vectors that minimize the mean squared error in this case. Geometrically, this is because the data all lie along a line (a 1-dimensional object) in 3-dimensional space, and by doing regression we are fitting a plane to the data. There are infinitely many planes that pass through a given line, each of them with a different corresponding weight vector, so there are infinitely many weight vectors that minimize the mean squared error.
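The non-uniqueness can be seen numerically. The original data table is not reproduced here, so the values below are hypothetical, chosen only to satisfy the relationships \(y = 4x_1\) and \(y = 2x_2\) stated in the solution. Under that assumption, every weight vector of the form \((0, 4 - 2t, t)^T\) has zero mean squared error, and the design matrix is rank deficient.

```python
import numpy as np

# Hypothetical data satisfying y = 4 * x1 = 2 * x2 (not the original table).
x1 = np.array([1.0, 2.0, 3.0, 5.0])
x2 = 2 * x1
y = 4 * x1

# Design matrix with a column of ones for the intercept w0.
X = np.column_stack([np.ones_like(x1), x1, x2])

def mse(w):
    """Mean squared error of the weight vector w on the data above."""
    return np.mean((X @ w - y) ** 2)

# The columns are linearly dependent (x2 = 2 * x1), so the rank is below 3.
rank = np.linalg.matrix_rank(X)

# A one-parameter family of weight vectors; t = 0 gives (0, 4, 0)
# and t = 2 gives (0, 0, 2), the two minimizers named in the solution.
candidates = [np.array([0.0, 4.0 - 2.0 * t, t]) for t in (0.0, 1.0, 2.0, -3.5)]
errors = [mse(w) for w in candidates]

print(rank)
print(errors)
```

Each choice of `t` corresponds to a different plane through the same line of data points.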

Now you might be thinking: the mean squared error is convex, so there can be only one minimizer. It is true that the MSE is convex, but that doesn't imply that there is only one minimizer! More specifically, any local minimum of a convex function is also a global minimum, but there can be many global minima. Take, for example, the function \(f(x, y) = x^2\). The point \((0, 0)\) is a minimizer, but so are \((0, 2)\) and \((0, 100)\), and so on. There are infinitely many minimizers!
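As a quick sanity check of the example \(f(x, y) = x^2\), the sketch below evaluates the function at the three points mentioned, confirms on a grid that no value falls below zero, and spot-checks midpoint convexity on random pairs of points. The grid, the seed, and the number of random pairs are arbitrary choices made for illustration.

```python
import numpy as np

def f(x, y):
    """Convex function that ignores y, so every point (0, y) is a minimizer."""
    return x ** 2

# The three minimizers named in the text all attain the value 0.
points = [(0.0, 0.0), (0.0, 2.0), (0.0, 100.0)]
values = [f(x, y) for x, y in points]

# No point on a coarse grid does better than 0.
xs = np.linspace(-3, 3, 61)
ys = np.linspace(-3, 3, 61)
grid_min = min(f(x, y) for x in xs for y in ys)

# Spot-check midpoint convexity: f((a + b) / 2) <= (f(a) + f(b)) / 2.
rng = np.random.default_rng(0)
a = rng.uniform(-10, 10, size=(1000, 2))
b = rng.uniform(-10, 10, size=(1000, 2))
mid = (a + b) / 2
convex_ok = np.all(
    f(mid[:, 0], mid[:, 1]) <= (f(a[:, 0], a[:, 1]) + f(b[:, 0], b[:, 1])) / 2 + 1e-12
)

print(values, grid_min, convex_ok)
```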